Service Core - A .NET Core Microservice backend

Hi! My name is Neil McAlister, and I'd like to share a story or two with you. We've spent a while working on a microservice-based backend, and I think it's time to share what we've learned.

Who We Are

First, it's important to understand who I'm talking about when I say "we". We are the Finavia DevOps team. We're a team of eight people: five developers, two designers, and one account manager. Collectively, we develop, maintain and operate the following on behalf of Finavia:

- The Helsinki Airport mobile app

- The Helsinki Airport web map

- The system that powers online parking reservations for Helsinki Airport

- The system that powers Helsinki Airport's Premium Lane security checkpoint experience

- A number of other miscellaneous things, including a few third-party integrations

...as well as the backend system that ties it all together.

We also work in close collaboration with the team that maintains and develops the Finavia website.

The Old Days

So now before I really get into things, I'm going to tell you a story. It's going to be a story of how things used to be before we moved into the land of milk and honey and microservices.

MOBA

Once upon a time, there was a backend server named MOBA, short for Mobile Application (Gateway). Its job was to support the airport's mobile application, which at the time was three mobile apps, one for each platform (iOS, Android, Windows Phone).

MOBA was originally written in 2012. In 2016, when mobile application development moved from three native applications to a single cross-platform iOS & Android application, MOBA was forked, and all development proceeded on the newly-christened "MOBANew". The original MOBA remained in order to support the Windows Phone application.

For its entire lifespan, MOBA never had a test environment. Deployment was manual and fragile: the project's readme described a 12-step process. Step zero was "try to do it at a time when no flights are going out", because deployment involved bringing the backend down. If a flight's status changed while the backend was down, the push notifications for that change would never be sent. Not ideal.

As part of the work on the new cross-platform application, our client expressed a desire to be able to take payment and allow users to create accounts. Because MOBA's architecture was, as one former team member described it to me, "spaghetti-like" at the time, the decision was made to do that work as part of a separate service.

Transition to the Future

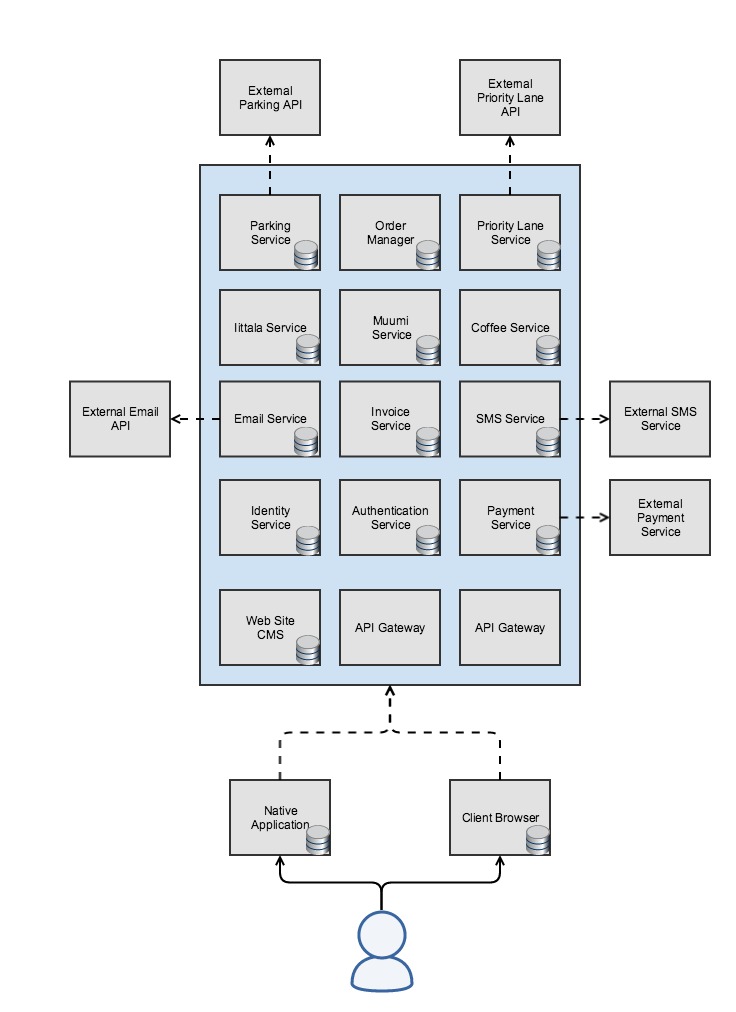

Payment Service Core

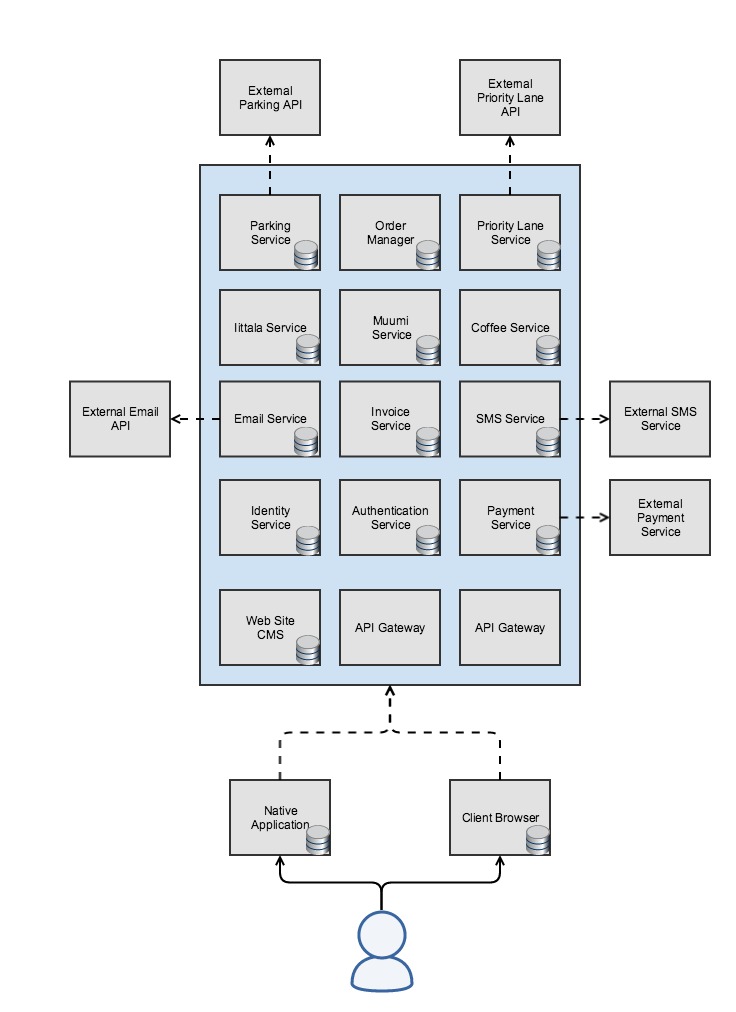

Enter Payment Service Core. It was pitched to the client with the diagram above. Its primary goal was to take payments so that users could reserve parking spaces at the airport.

The idea was that it would be a microservice-based backend, in which the microservices would communicate with each other over HTTP via RESTful APIs. The decision was made to write it in .NET 4.5 and C# instead of MOBA's Java, for two reasons. First, one of the client's requirements was that it must run on Windows Server 2012. Second, it had to be able to live in the cloud, because there were distant plans to move from physical servers to the cloud. We knew .NET Core was coming, and that it would be cross-platform and cloud-friendly, so .NET seemed like a natural choice.
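Below is a minimal sketch of what "microservices communicating over HTTP via RESTful APIs" looks like in practice. It is written in Python purely for brevity; Service Core itself is C# on ASP.NET. The `/users/<id>` route, the JSON shape, and the `UserServiceHandler` and `fetch_user` names are hypothetical and are not Service Core's real API. One process plays a tiny User service. The calling code stands in for something like the Order service and knows nothing about the User service except its base URL.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


# Hypothetical "User" service: answers GET /users/<id> with a JSON body.
class UserServiceHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        parts = self.path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "users":
            body = json.dumps({"id": parts[1], "name": "Example User"}).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def start_user_service():
    # Port 0 lets the OS pick a free port; serve requests on a background thread.
    server = ThreadingHTTPServer(("127.0.0.1", 0), UserServiceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


# Hypothetical caller (e.g. an Order service): it only needs the User service's base URL.
def fetch_user(base_url, user_id):
    with urllib.request.urlopen(f"{base_url}/users/{user_id}", timeout=5) as resp:
        return json.loads(resp.read().decode())


if __name__ == "__main__":
    server = start_user_service()
    host, port = server.server_address[:2]
    print(fetch_user(f"http://{host}:{port}", "42"))
    server.shutdown()
    server.server_close()
```

The only contract between the two sides is the URL and the JSON body. Either side can therefore be rewritten or redeployed on its own.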

Work began on May 11, 2016, and the first phase was completed by the end of October 2016. At the time, there were eight services: Payment, Authentication, Order, Email, SMS, Invoice, Mobile App Gateway, and User.

Continuous Everything

Payment Service Core had a few driving philosophies. One of the most important was automation. Everything had to be automated from day one: testing, building, and deploying. In addition, each microservice had to be updatable and deployable independently. The goal was to be able to add features and fix bugs both quickly and safely. None of us wanted to return to copy-paste deployments, or to timing deployments around push notifications. One oft-quoted target was a deployment process so simple that our product owner could do it.

In reality, this was a roaring success. Once everything was up and running, we had the capability to make multiple deployments to production daily. We even had enough confidence in our infrastructure to deploy on Friday evenings without fear of weekend-ruining phone calls!

Our Process

(In the slides, I had a super-cool animation where each of these appeared one by one. Alas.)

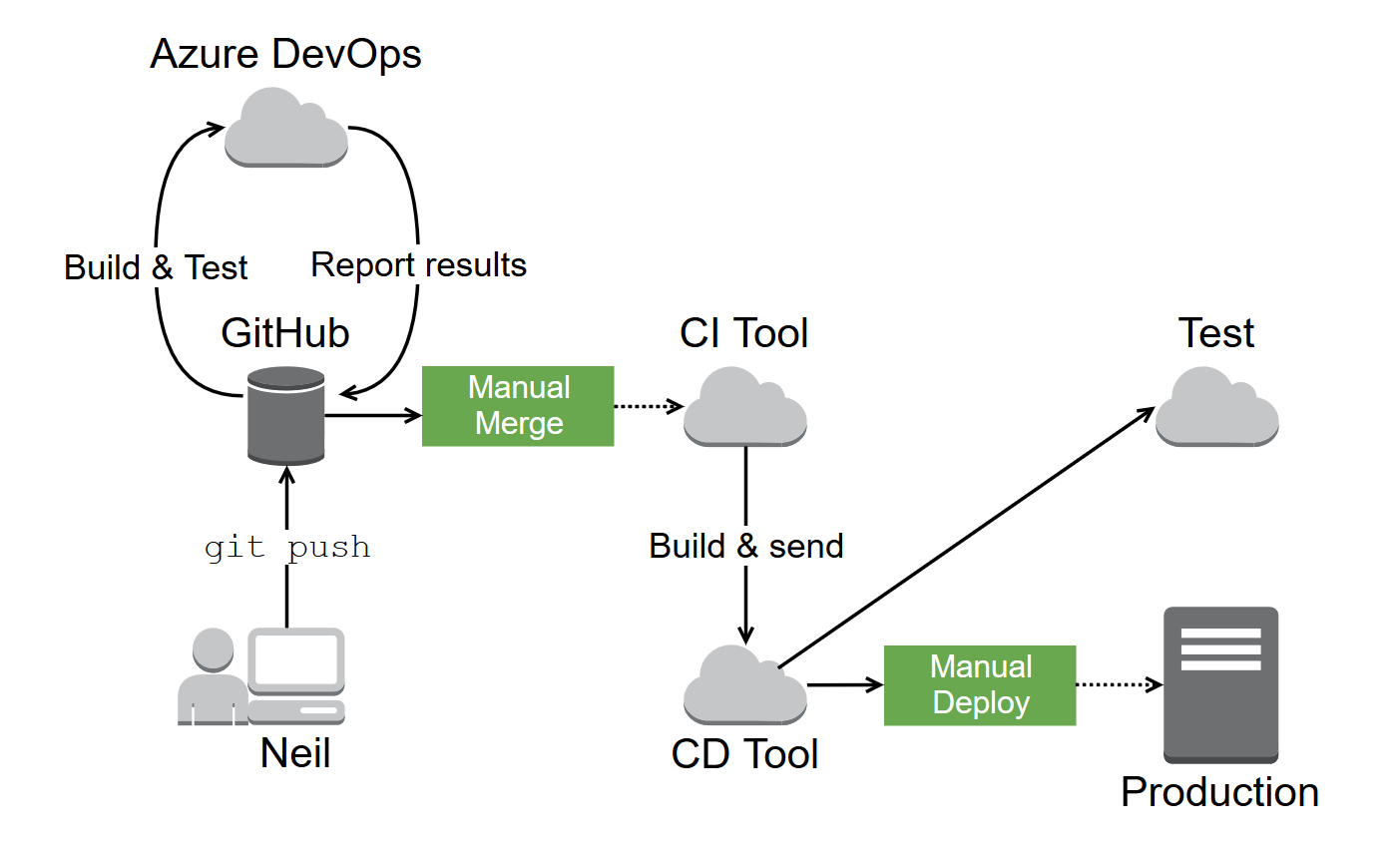

Today, our process is still heavily automated.

It begins with a developer (let's call him "Neil") writing code. Once he's done with his feature, or bug fix, he pushes his branch up to our repository in GitHub and opens a pull request.

Our build machine sees this, and our Azure DevOps agent automatically builds the branch and runs our test suite on it. If the branch passes the tests and another team member's review, Neil merges it into the master branch.

Next, our CI (continuous integration) tool (Jenkins, at the moment) builds all the microservices that this pull request changes, and sends the built artifacts out to our CD (continuous deployment) tool (Octopus Deploy, at the moment) which will automatically deploy those changes to our test environment.
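Below is one way a CI step could work out "all the microservices that this pull request changes". It is a Python illustration, not Service Core's actual Jenkins configuration. It assumes a mono-repo where each service lives under a hypothetical `services/<Name>/` folder and the shared Core library lives under a hypothetical `libs/Core/` folder. Because every service uses Core, a change there selects everything.

```python
from pathlib import PurePosixPath


def services_to_build(changed_files, all_services):
    """Return the sorted names of services affected by the changed files.

    Assumes a hypothetical layout: services/<Name>/... for each service and
    libs/Core/... for the shared library every service references.
    """
    selected = set()
    for path in changed_files:
        parts = PurePosixPath(path).parts
        if parts[:2] == ("libs", "Core"):
            # Core is used by every service, so everything must be rebuilt.
            return sorted(all_services)
        if len(parts) >= 2 and parts[0] == "services" and parts[1] in all_services:
            selected.add(parts[1])
    # Files outside any service folder (README, docs, ...) select nothing.
    return sorted(selected)


if __name__ == "__main__":
    services = {"Payment", "Order", "Sms", "User"}
    print(services_to_build(["services/Sms/SmsSender.cs", "README.md"], services))
```

Only the selected services' artifacts would then be handed to the deployment tool, which keeps each deploy small.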

Finally, after some testing in the test environment, maybe a few rounds of bugfixing, and validation from our client if necessary, we decide the changes are ready to go live and press a shiny "send to production" button in our CD tool. Done!

The Road to .NET Core

2016 sees the release of the new cross-platform mobile application. Payment Service Core is so successful that new feature development continues on it, rather than MOBA. As we enter 2017, Payment Service Core is handling a wide enough variety of things that we drop 'Payment' from its name and begin just calling it Service Core.

It's also around this time that .NET Core 1.1 is released and, after some time evaluating it, we make the call: we're ready to migrate. We decide to do so for two reasons: first, the aforementioned cross-platform capability that will let us run more easily in the cloud. Second, we get all the shiny performance improvements that come along with .NET Core.

We start migration work on May 2nd of 2017. Our decision to follow a microservice approach immediately pays off here, because we can begin migrating our services one-by-one, starting with the less mission-critical ones first. This allows us to take a measured, incremental approach to upgrading. We can find all the pitfalls, the footguns, and the landmines in small doses before we commit to upgrading our more important services.

A little over a year later, migration work is completed; every service is running on .NET Core. Of course, we weren't just upgrading during this time. We were also developing new features and fixing bugs in all the other services!

As for the upgrade process itself, it wasn't that bad! Our first service upgrade took about two weeks of cautious experimentation and documentation reading. By the time we got to the last few, we could migrate a service in about two hours.

Our biggest pain points were the following four:

- ASP.NET Core dropped background jobs entirely. We eventually settled on Hangfire as a replacement, but it certainly wasn't drop-in. For a while, we just went without them entirely, and took the performance hit.

- Every controller action had to have its return types updated. Not complicated, but tedious and labor-intensive.

- The migration from Entity Framework (the ORM we use to communicate with our database) to Entity Framework Core. The default table-naming behavior changed, and we had to write some custom code to deal with that in several places.

- Finally, the one that hurt the most: we had to completely reimplement authentication. Our old method relied on an OAuth implementation that ASP.NET 4 provided, which didn't exist in ASP.NET Core. This was especially scary because Authentication was such an important service. The only thing that made this marginally less terrifying was our extensive test suite for the authentication service.
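For readers who haven't met background jobs, the core idea is this. A request hands off slow work, such as sending an email, and returns immediately, while something else runs that work later. Below is a minimal in-process Python sketch of the idea. It is not Hangfire and does not mirror its API; `BackgroundJobQueue` is a hypothetical name. Unlike Hangfire, it has no persistent storage, retries, or scheduling, so queued jobs are lost if the process restarts.

```python
import queue
import threading


class BackgroundJobQueue:
    """Minimal in-process job queue: callers enqueue work and return immediately,
    while a single worker thread runs jobs in FIFO order."""

    def __init__(self):
        self._jobs = queue.Queue()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def enqueue(self, func, *args):
        self._jobs.put((func, args))

    def _run(self):
        while True:
            func, args = self._jobs.get()
            try:
                func(*args)
            except Exception as exc:
                # A real system would log and probably retry; this sketch just reports it.
                print(f"job failed: {exc!r}")
            finally:
                self._jobs.task_done()

    def wait_until_idle(self):
        # Blocks until every enqueued job has finished (successfully or not).
        self._jobs.join()


if __name__ == "__main__":
    jobs = BackgroundJobQueue()
    sent = []
    jobs.enqueue(sent.append, "receipt for order 1")
    jobs.wait_until_idle()
    print(sent)
```

The gap between this sketch and a production job system (durability, retries, scheduling, a dashboard) is exactly what made the replacement "certainly not drop-in".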

Realities of Microservices

In the process of building and maintaining Service Core over the last three years, we've learned some things about microservices, which I will now present as gospel truth.

The first is that microservices bring about more inherent complexity. Instead of having a single thing you build and deploy and run, now you've got eight, or twenty, or a hundred. And all of these services need to have a way of talking to each other. And now you need to have some kind of infrastructure to build and deploy them, because there's no way you'll manage copy-paste deployments on a hundred tiny services.

For similar reasons, debugging multiple services simultaneously is complex. Instead of just hitting F5, now you need to make sure that all your locally-running services are talking to each other (and all actually running locally...).

A lot of the complexity of your backend is going to move, but it isn't going to go away. Individual microservices have the advantage of being simple, because each has a single responsibility. But now you've got to manage the complexity of a build system, a deployment system, and figuring out how to make all these tiny little things talk to each other.

Finally, and this is a bit of a recent speed bump for us: sharing code without duplication is hard! For a long time we had a single "Core" library that all the microservices used. More recently, though, we've had code that we wanted to share between only 2 or 3 or 4 services. The solution, as it turned out, was just "write another library project, and only reference it in the 2 or 3 or 4 services that need it". But that's not something you even need to consider in a monolith-style project.
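A partially-shared library raises a follow-on question: when it changes, which services need to be rebuilt and redeployed? Below is a minimal Python sketch of that lookup. It walks a project-reference graph backwards from the changed library. The `REFERENCES` map and the `Billing.Shared` project are hypothetical. In a real .NET repo, the edges would come from each `.csproj` file's `ProjectReference` entries.

```python
from collections import deque

# Hypothetical project-reference graph: project -> projects it references.
REFERENCES = {
    "Payment": ["Core", "Billing.Shared"],
    "Invoice": ["Core", "Billing.Shared"],
    "Order": ["Core"],
    "Billing.Shared": ["Core"],
    "Core": [],
}


def dependents_of(library, references):
    """Return every project that references `library`, directly or transitively."""
    # Invert the graph so we can walk from a library up to its consumers.
    reverse = {}
    for project, deps in references.items():
        for dep in deps:
            reverse.setdefault(dep, []).append(project)
    # Breadth-first search over the inverted edges.
    seen, pending = set(), deque([library])
    while pending:
        for parent in reverse.get(pending.popleft(), []):
            if parent not in seen:
                seen.add(parent)
                pending.append(parent)
    return seen


if __name__ == "__main__":
    print(sorted(dependents_of("Billing.Shared", REFERENCES)))
```

A change to `Billing.Shared` touches only two services here, while a change to `Core` fans out to everything. That asymmetry is the reason for keeping narrowly-shared code out of the one big library.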

A Happier Present

Lessons Learned

So more broadly, what else have we learned in our time developing and operating Service Core? Lots of stuff!

Automation was, and remains, hugely important. Write tests! Make it easy to run those tests! Make things easy to build and deploy! Then make a robot do it, because you've got better things to do.

One big advantage of microservices is "swappability", which is a word I just made up. In practice, it's easy for us to swap out implementations under the hood with very little disruption. At one point, our client's contract with their old SMS provider ran out, and we had to switch to a new SMS provider on extremely short notice. We swapped in a new implementation behind our existing interface and redeployed the service. Users were none the wiser.
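The SMS swap works because callers depend on an interface rather than on a provider. Below is a minimal Python sketch of that shape; Service Core does the equivalent with C# interfaces. `SmsSender`, both provider classes, `make_sender`, and `send_confirmation` are hypothetical names, and the actual provider calls are stubbed out.

```python
from abc import ABC, abstractmethod


class SmsSender(ABC):
    """The only SMS abstraction the rest of the service depends on."""

    @abstractmethod
    def send(self, phone_number: str, message: str) -> str:
        """Send a message and return a provider-specific message id."""


class OldProviderSender(SmsSender):
    def send(self, phone_number: str, message: str) -> str:
        # A real implementation would call the old provider's API here.
        return f"old:{phone_number}"


class NewProviderSender(SmsSender):
    def send(self, phone_number: str, message: str) -> str:
        # A real implementation would call the new provider's API here.
        return f"new:{phone_number}"


# Which provider is live is a configuration choice, not a change to business logic.
PROVIDERS = {"old": OldProviderSender, "new": NewProviderSender}


def make_sender(provider_name: str) -> SmsSender:
    return PROVIDERS[provider_name]()


def send_confirmation(sender: SmsSender, phone_number: str, reference: str) -> str:
    # Business logic sees only the interface, never a concrete provider.
    return sender.send(phone_number, f"Your booking {reference} is confirmed.")


if __name__ == "__main__":
    print(send_confirmation(make_sender("new"), "+358000000000", "ABC123"))
```

Switching providers then comes down to changing which implementation `make_sender` returns and redeploying the one service that owns SMS.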

This one might get me tarred and feathered a little bit, but mono-repos are better. There's a story here: we actually started with a one-repo-per-microservice setup. Then, we had a developer join the project, and spend a month working on a new feature that had concerns across multiple microservices. When the time came to merge it in, he had a massive pile of merge conflicts. Turns out that for several of the services, he'd been working on code that was months out of date. It took a week to sort out all the merge issues. Shortly after that, we moved to a mono-repo and things have been lovely ever since. (Although do note: before the performance improvements brought by .NET Core and more recent versions of Visual Studio, opening up the big multi-project solution hurt.)

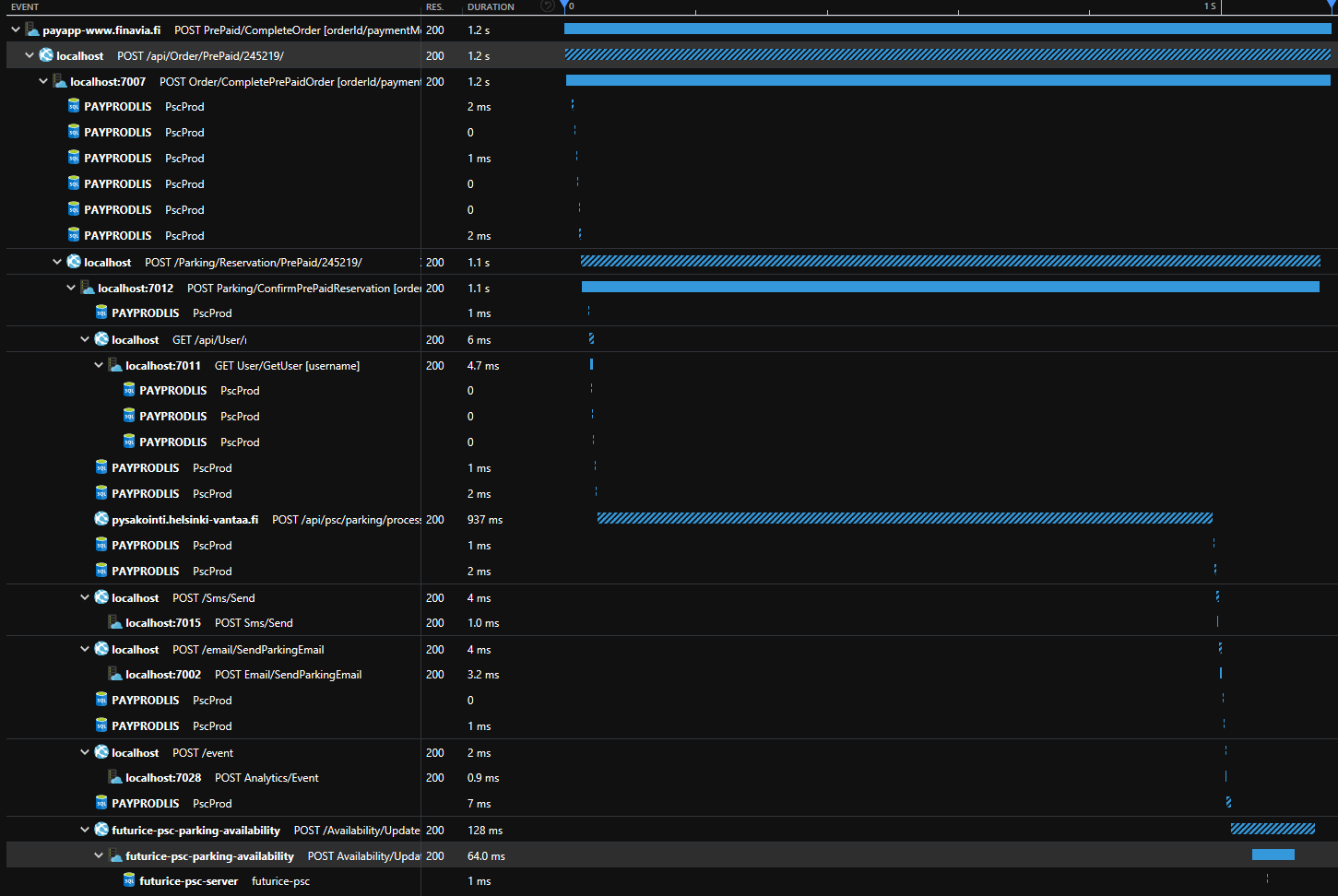

You need good logging. Need it! You've got requests coming in and wandering willy-nilly across any number of independent little microservices. Unless you've got a tool that can track a request from start to finish, you don't have a chance of tracking down what happened when something goes wrong. Or when something happened at all!
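A common way to track a request across services is a correlation id. The first service to see a request assigns an id, every log line includes that id, and every outgoing call forwards it. Application Insights handles this propagation itself. The Python sketch below only illustrates the underlying idea. The `X-Correlation-ID` header and the `handle_incoming_request` function are hypothetical.

```python
import contextvars
import logging
import uuid

# The id of the request currently being handled in this context.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Stamps every record passing through a handler with the current correlation id."""

    def filter(self, record):
        record.correlation_id = correlation_id.get()
        return True


def handle_incoming_request(headers, log):
    # Reuse the caller's id if present; otherwise this service starts a new trace.
    cid = headers.get("X-Correlation-ID") or str(uuid.uuid4())
    correlation_id.set(cid)
    log.info("handling request")
    # Headers to forward on any outgoing call to another service.
    return {"X-Correlation-ID": cid}


# Every line this logger writes is prefixed with the correlation id.
handler = logging.StreamHandler()
handler.addFilter(CorrelationIdFilter())
handler.setFormatter(logging.Formatter("%(correlation_id)s %(name)s %(message)s"))
log = logging.getLogger("order-service")
log.addHandler(handler)
log.setLevel(logging.INFO)

if __name__ == "__main__":
    print(handle_incoming_request({"X-Correlation-ID": "abc-123"}, log))
```

If every service follows this rule, searching the aggregated logs for one id returns the request's whole journey.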

Predicting more tar and feathers in my future: if you can't deploy on Friday, you're doing it wrong.

The Boy Scout approach (leave things better than you found them) applies to more than just cleaning up code. It applies to infrastructure, it applies to project upgrades. When we were in the midst of our upgrade-to-.NET-Core frenzy, we had a rule: if you touch a project and it hasn't been upgraded, congratulations. You are now in charge of upgrading it.

Being able to (safely!) use the latest technology is really very nice. Because we can upgrade one little piece at a time, it's extremely easy for us to track the latest versions of the technologies we rely on and get all the fun new features. (Incidentally, we have an open PR in the repo waiting for .NET Core and C# 8. We're very excited about C# 8's nullable reference types feature.)

Service Core Today

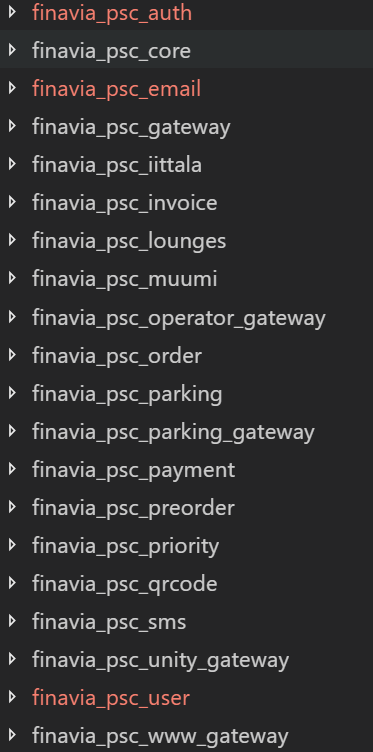

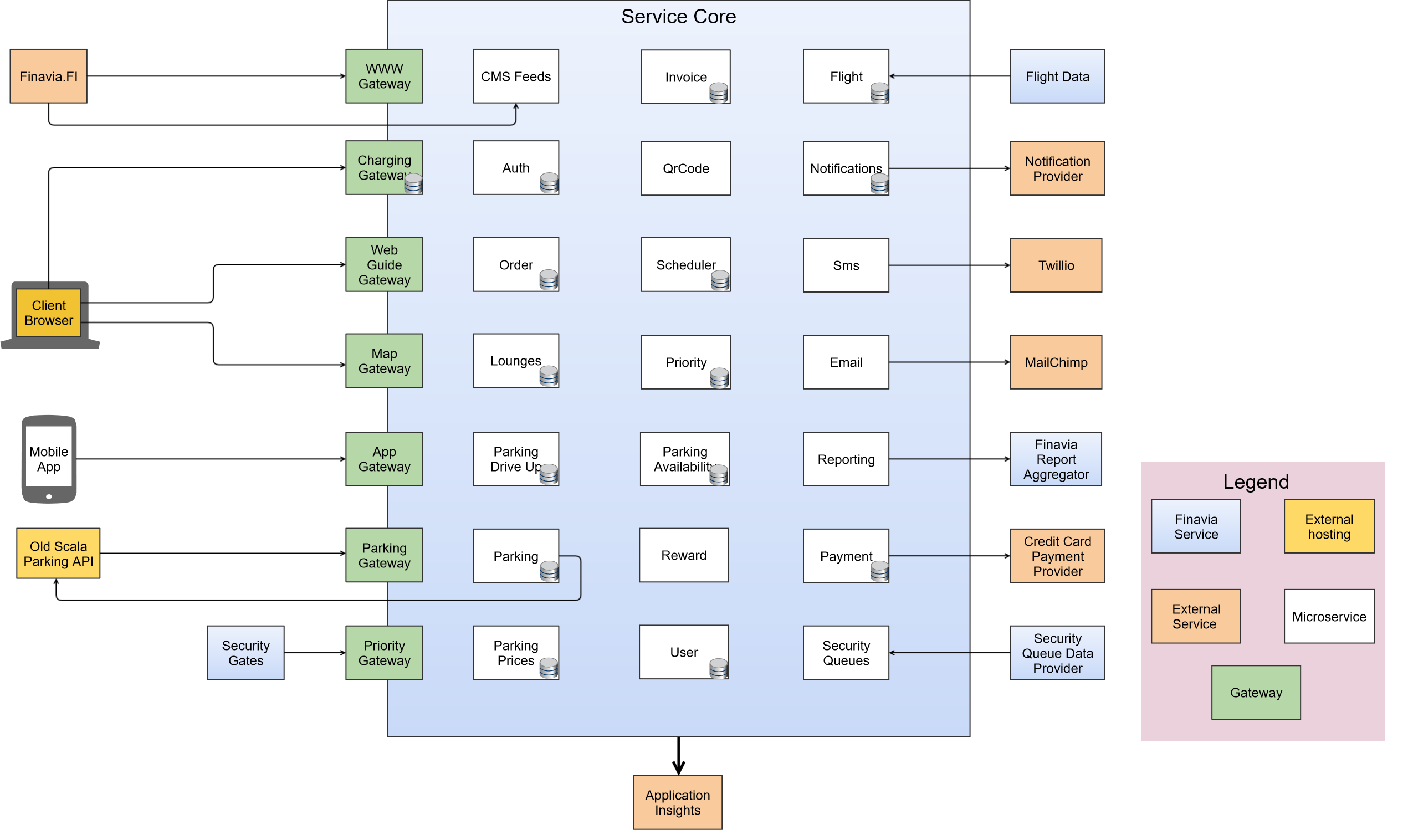

From its humble beginnings as Payment Service Core...

...Service Core has come a long way:

Today, there are 35 distinct services (only 28 of which are shown above, for NDA reasons). We add new services at a rate of about one a month.

We've got 150 individual projects (defined as a .csproj-plus-files), all of which are written in C# 7, and running on .NET Core (or .NET Standard) 2.x. We're using ASP.NET Core 2.2, and most of our projects are just Web API projects (though we do have two React SPAs served by ASP.NET Core).

We are currently rewriting (almost done, in fact!) an older Scala backend in C# and bringing it into Service Core. Previously, Service Core talked to it, but it was very much its own beast. In the process of the rewrite, it got split into about 3 individual microservices, each with its own clearly-defined responsibility.

Azure

I did promise some Azure talk, didn't I? Let's talk about Azure.

Azure powers our entire test environment. Every microservice gets a dedicated App Service app. Although they run on very different infrastructure, our test and production environments are as identical as possible. In practice, when testing and developing, the only difference is in data sources.
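One way to keep environments "as identical as possible" is to share every setting and let only the data-source entries vary, then check that rule in a test. Below is a minimal Python sketch. The setting names and connection values are hypothetical placeholders; Service Core's real configuration is not shown in this post.

```python
# Settings every environment shares.
BASE_SETTINGS = {
    "FlightCacheSeconds": 60,
    "EnableSmsNotifications": True,
}

# The only per-environment values: where the data comes from.
DATA_SOURCES = {
    "test": {
        "Database": "Server=test-db;Database=ServiceCore",
        "FlightApi": "https://flights.test.example.com",
    },
    "production": {
        "Database": "Server=prod-db;Database=ServiceCore",
        "FlightApi": "https://flights.example.com",
    },
}


def settings_for(environment):
    """Merge the shared settings with one environment's data sources."""
    return {**BASE_SETTINGS, **DATA_SOURCES[environment]}


def differing_keys(a, b):
    """Names of settings whose values differ between two environments."""
    return sorted(key for key in a.keys() | b.keys() if a.get(key) != b.get(key))


if __name__ == "__main__":
    print(differing_keys(settings_for("test"), settings_for("production")))
```

An assertion that `differing_keys` returns only the data-source names is a cheap guard against an environment-specific setting creeping in.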

Azure also powers our logging system (which I will talk a little more about shortly). It also powers our alerting, both for test and production, even though our production environment is "physical servers provided by the client".

Azure DevOps also runs our tests on every GitHub PR (every commit to the PR, in fact!) via the new GitHub Apps feature. We're even considering migrating from our current CI & CD tools to hosted Azure DevOps, so we no longer have to manage our own VM, Jenkins instance, and Octopus Deploy instance. We're still waiting on a few features currently in preview, though.

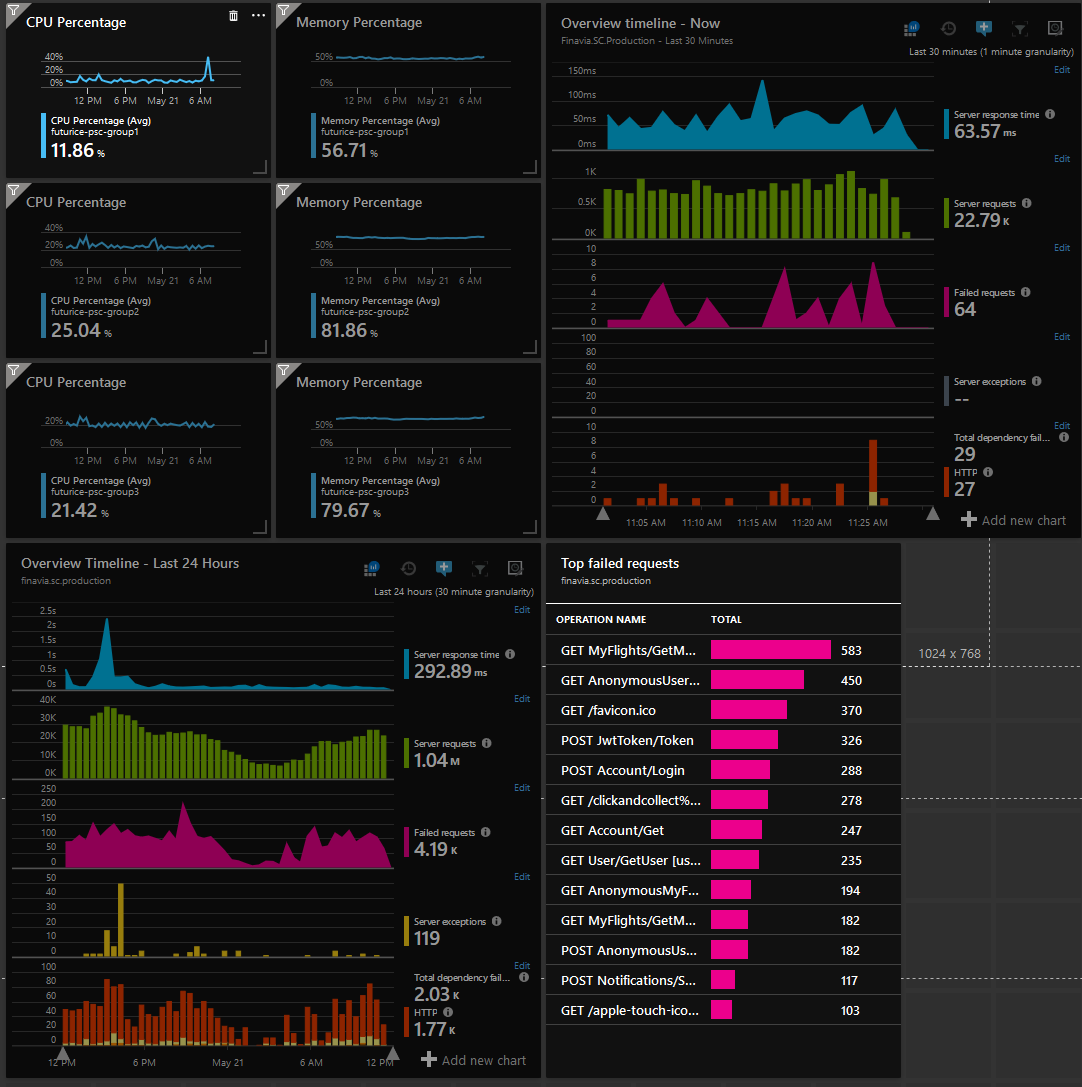

Application Insights

Have I mentioned that you need good logging? I think I have. Maybe.

We use an Azure service called Application Insights for all of our logging needs. And profiling. And failure analysis. And... you get the picture.

Application Insights is a log aggregation tool with a slick UI in the Azure Portal that's able to track everything your backend does. We have it set to track every request, exception, log entry, and call to external dependencies. It allows us to find an individual request, and drill down to see exactly how long it spent doing what.

It also has a sister tool named Log Analytics that lets you query and visualize the raw data that Application Insights collects. We've used it to do things like measure the performance characteristics of our flight-information service and graph them over time, to see whether the code changes we were making had an impact on performance.
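In Log Analytics, this kind of trend comes from a short Kusto query along the lines of `requests | summarize percentile(duration, 50) by bin(timestamp, 1d)`. To make the logic visible, the Python sketch below does the same aggregation over exported `(timestamp, duration in ms)` rows. The function name and the sample values are made up for illustration and are not real Service Core measurements.

```python
from collections import defaultdict
from datetime import datetime
from statistics import median


def daily_median_duration(requests):
    """Group (timestamp, duration_ms) rows by calendar day and return
    {date: median duration}, ordered by date, ready to chart over time."""
    by_day = defaultdict(list)
    for timestamp, duration_ms in requests:
        by_day[timestamp.date()].append(duration_ms)
    return {day: median(durations) for day, durations in sorted(by_day.items())}


if __name__ == "__main__":
    # Made-up sample rows for illustration only.
    samples = [
        (datetime(2019, 3, 1, 9, 0), 120.0),
        (datetime(2019, 3, 1, 9, 5), 80.0),
        (datetime(2019, 3, 2, 9, 0), 60.0),
    ]
    for day, value in daily_median_duration(samples).items():
        print(day, value)
```

Plotting one value per day before and after a deploy makes it easy to see whether a change moved the needle.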

The best part is that all of this works even if you aren't running on Azure. We use it in both our test and our production environment, even though our production environment is running on very non-cloud physical hardware. Application Insights provides SDKs for major platforms/frameworks, and for those that it doesn't, there is also a REST API.

Takeaways

So what? Well, there are three major points I'd like you to come away with:

- Microservices can enable agility. They've allowed us to stay up to date with new technology, and make changes and fix bugs fast, without compromising on safety.

- .NET Core is ready, and migration is feasible. We're running on .NET Core everywhere, and we haven't found any holes in the platform. Migration took some effort, but was very manageable.

- Azure can offer some useful benefits even if you're not interested in going all in.

It's been a fun journey over the past several years, and we've learned a lot.

Thanks!

Thanks for reading! I appreciate your time, and if you've got any questions or feedback, feel free to reach out. I'm available on Twitter @pingzingy, or you can see more on my company profile.

Neil McAlister, Software Developer
